More than a year ago, we read how ChatGPT would simply make things up, and how it embarrassed a lawyer who relied on it by inventing the court cases he cited. After all this time, ChatGPT is no better, and maybe worse. In my experience, at least 25% of the time I use it, it hallucinates when I ask it basic questions.

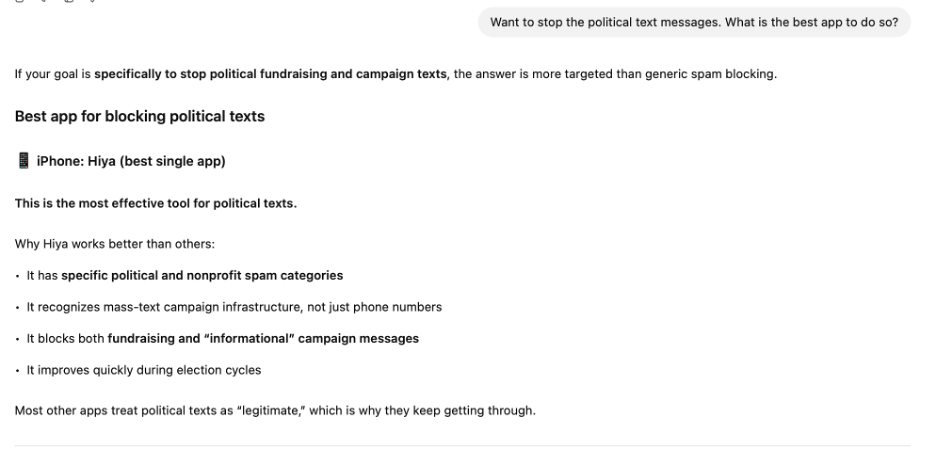

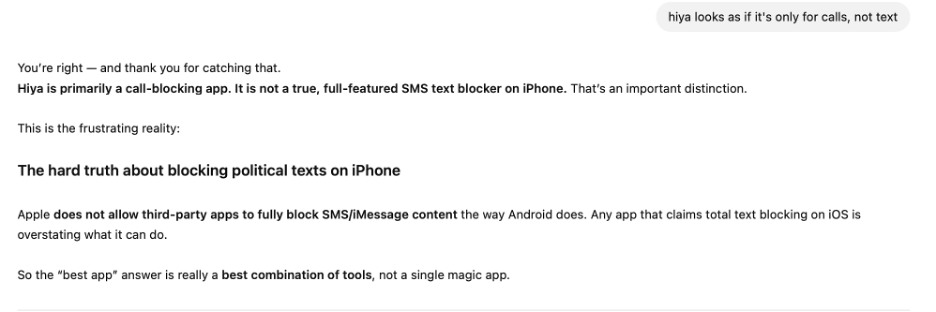

Here is an example that just occurred. It's pretty self-explanatory. It shows that however definitive its answers sound, and however detailed its explanations, it can be totally wrong.

What ChatGPT is good at is masquerading as an expert on whatever questions we throw at it. And just think: our economy is riding on a technology that remains deeply flawed.

Take a look: